Hi there! I am Tianyu Liu, a fourth-year joint Ph.D. student at USTC and Shanghai AI Laboratory, supervised by Xiao Sun.

My research focuses on efficient inference for LLMs. I work on speculative decoding, a promising technique for accelerating LLM inference, and I am open to collaborations on related inference topics.

🔥 News

- 2026.01: Released four preprints: TALON, KALE, Double, and HIPPO!

- 2025.10: Released nano-PEARL, an engineering follow-up to PEARL with multi-GPU draft-target disaggregation.

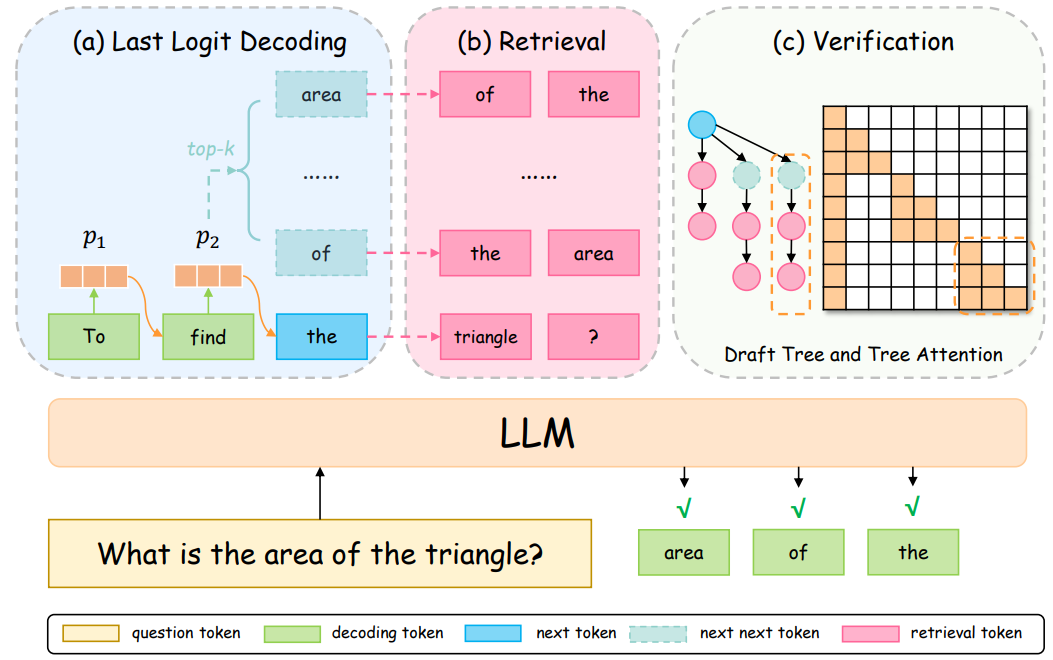

- 2025.07: Released the LogitSpec preprint on arXiv, a training-free retrieval-based speculative decoding method!

- 2025.01: PEARL was accepted to ICLR 2025!

- 2025.01: One paper was accepted to NAACL 2025. Thanks to Qitan for carrying this one!

- 2023.09: REST was accepted to NeurIPS 2023!

📝 Selected Publications

†: corresponding author; *: equal contribution

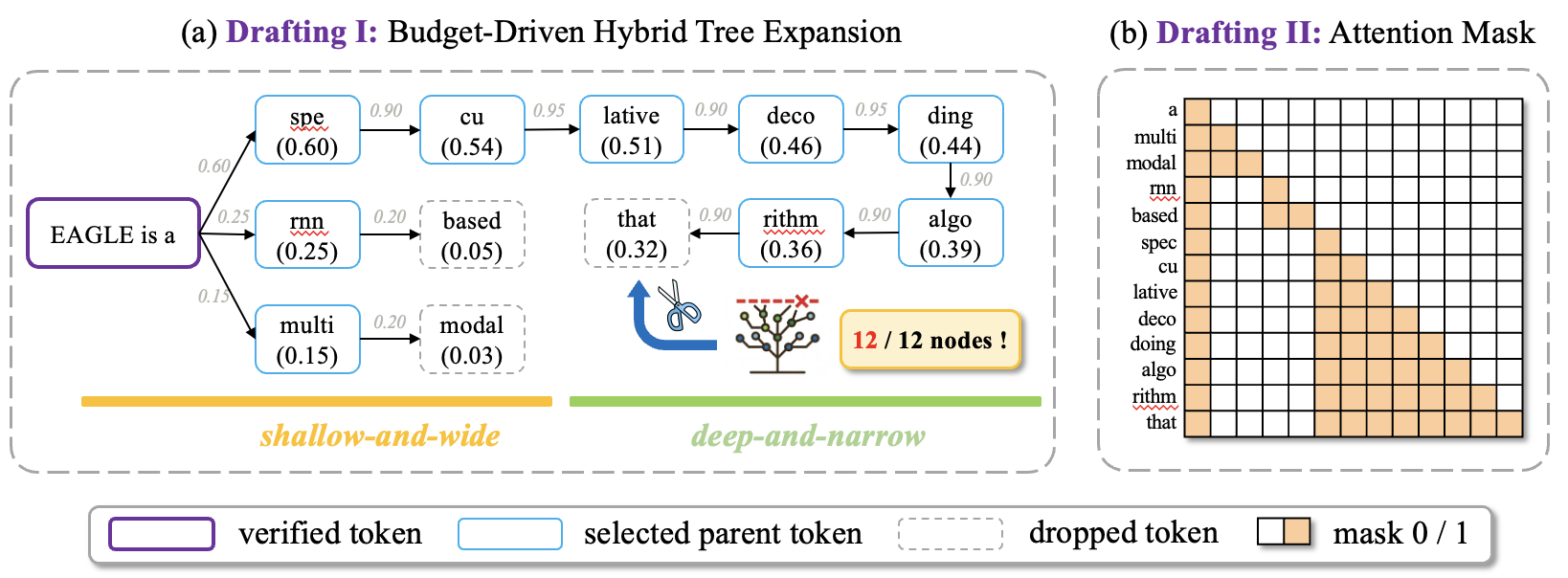

TALON: Confidence-Aware Speculative Decoding with Adaptive Token Trees

Tianyu Liu*, Qitan Lv*, Yuhao Shen, Xiao Sun†, Xiaoyan Sun

🗂 Other Publications

arXiv 2025 HIPPO: Accelerating Video Large Language Models Inference via Holistic-aware Parallel Speculative Decoding

Qitan Lv*, Tianyu Liu*, Wen Wu, Xuenan Xu, Bowen Zhou, Feng Wu, Chao Zhang

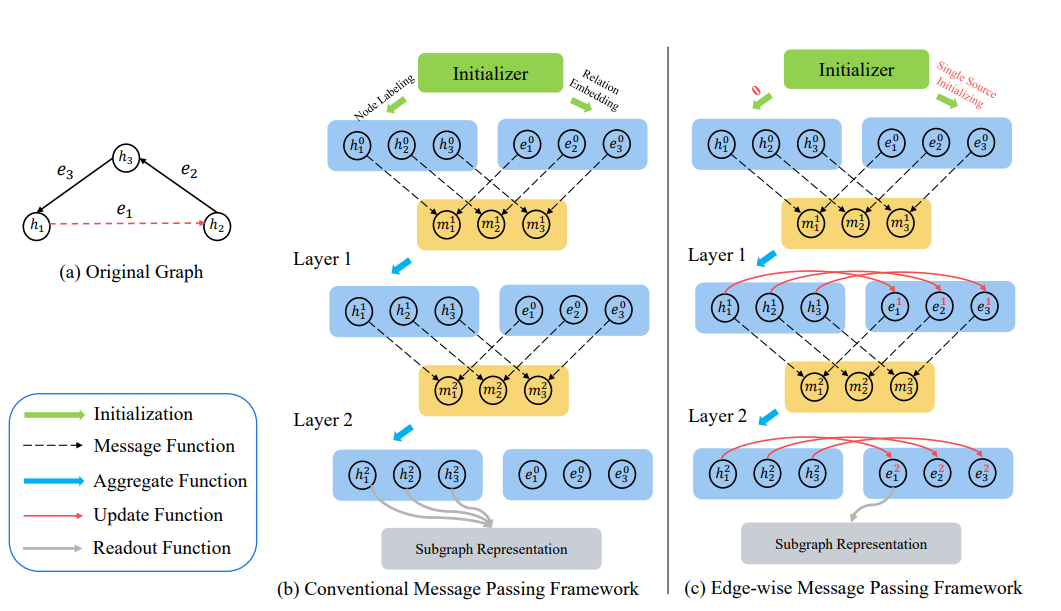

arXiv 2025 KALE: Enhancing Knowledge Manipulation in Large Language Models via Knowledge-aware Learning

Qitan Lv*, Tianyu Liu*, Qiaosheng Zhang, Xingcheng Xu, Chaochao Lu

arXiv 2025 Double: Breaking the Acceleration Limit via Double Retrieval Speculative Parallelism

Yuhao Shen, Tianyu Liu, Junyi Shen, Jinyang Wu, Quan Kong, Li Huan, Cong Wang

arXiv 2025 Speculative Decoding via Hybrid Drafting and Rollback-Aware Branch Parallelism

Yuhao Shen, Junyi Shen, Quan Kong, Tianyu Liu, Yao Lu, Cong Wang

NAACL 2025 Exploiting Edited Large Language Models as General Scientific Optimizers

📖 Education

- 2022.09 - Present, Ph.D. in the Department of Electronic Engineering and Information Science, University of Science and Technology of China.

- 2018.09 - 2022.06, B.Sc. in Computer Science and Technology, Central University of Finance and Economics.

🖥️ Professional Services

- Conference reviewer for ICLR'25, ICLR'26, WWW'25, NeurIPS'25.